I started this mini-series with the need to create models that could work with tail events, working with small data and inferential thinking. In our final installment (and probably the densest!), I want to dip our toes into the world of simulations to help us navigate the business problem landscape in this ‘new normal’.

As the world re-opens, businesses are waking up to a new set of decision challenges: how do you plan and make business choices with limited data and increased uncertainty of outcomes? If you have been following the corporate earnings over the last few weeks – there is one dominant narrative: almost every company is opting to avoid putting out any guidance on earnings, growth et al. And rightfully so – there is little a CFO can do to figure out a predictable guidance, leave alone committing it to the world.

However, that does not mean business managers no longer need to make long-term decisions: in fact, if anything, it is becoming even more important that alternative business choices are evaluated and actioned at a faster clip. For instance, the Risk Officer in a bank needs to decide on, say – how should the bank extend loan repayment waiver options to their home mortgage customers? Or how should the customer risk models be re-calibrated for existing customers – and how should that drive the options available for client advisors to engage with the customers? We are bound to see a shift in the importance (greater financial implications) and the urgency (reduced time to decision) of questions.

Which then brings us to the point: how do we model the impact of such scenarios in meaningful ways to provide the right decision support? We would like embrace the Bayesian inferential mindset: start with an initial belief or a model of say, the probability of default (prior distribution) and then learn from data (estimate the likelihood function) and use that to update the model (posterior distribution) However, the challenge is apparent: what if the data is noisy (i.e. your observations don’t help you in converging on a distribution?) and ends up skewing your initial model in the first place. Which is precisely what is happening out there – as each month passes by, the uncertainty keeps going up in dramatic ways and instead of converging to a ‘new normal’ (a stable posterior distribution), the models seem to get less reliable.

Might Markov help?

Once again, a quick diversion into the history of mathematics, and forewarned that this paragraph can get a little dense – but as it usually happens, the underlying concepts are extremely intuitive.

In the 18th century, Jacob Bernoulli (of the prodigious Bernoulli clan) laid down the ‘weak law of large numbers’ which proved that as the number of observations keep going up, the sample behavior (mean and standard deviation) will converge to the population assuming the individual observations are independent. This has been the bedrock of the traditional statistical modeling ever since. In fact, most of the standard regression models that data scientists have been building over the last several years have been built on this principle (see my post on this) and generally speaking, this works just fine. Except of course, real life tends to be messy (!): more often than not, the independence assumption doesn’t hold. There is where Markov comes as an important guide in our journey.

He was one of the many brilliant men of Science and Mathematics who came out of Moscow from late 19th century onwards (yet another fascinating story of a cluster effect from St. Petersburg) – his fundamental insight was that the law of large numbers could be applied to a sequence of dependent random variables which satisfy the property: the probability of a certain state being reached depends only on the previous state of the chain.

This is the memoryless property (i.e. the previous event captures the entire history up until that point and that alone, is good for us to compute the probability for the next event. In other words, p(x5|x1,x2,x3,x4) = p(x5|x4)). This was a brilliant insight because it opened up statisticians to the possibility of studying real-life phenomena: from the transmission of genes to individual behavior to weather predictions and perhaps most famously, the Google PageRank algorithm.

However, Markov chains tend to be computationally intensive, which is one of the reasons they didn’t go mainstream in business statistics – but now that constraint is no longer there. This gives us an interesting way to model human behavior – our choices over time can be modeled as a Markov chain – in terms of the current state and a transition probability. Simplistically put, if we can converge on a transition probability function, we can create a ‘walk’ of choices and outcomes over time. And in cases where we do NOT know the transition probability function, we could simulate with different options until we converge on a distribution that works for us. With that, it would be possible for study the evolution of behavior (e.g. probability of default; or decay rate of campaigns over time)

If your head is spinning by now, don’t blame yourself. Here are the steps:

- Bayesian Inference: start with an initial distribution and we need to converge on a posterior distribution based on observations

- To estimate the likelihood function from the observations, simulate scenarios with different parameters. Assume a Markov chain behavior to generate simulations for each parameter (each set of parameters is a scenario)

- Run simulations till you converge to a set of parameters that capture the posterior distribution. This would help you model how the real-world scenarios play out.

So what does this mean?

Back to our Risk Officer’s problem. As she continues to be faced with these difficult decision choices – we are beginning to discover the power of simulations. A class of algorithms called MCMC (Monte Carlo Markov Chain) simulations could help answer some of the questions: How will an individual customer’s risk score change over the next few months? How will an additional month’s waiver of the loan installment impact the overall lifetime economics of the loan?

As the data scientists continue to rely more on AI and data to help in decisions – my advice always remains the same: ask the hard questions of your data scientist, push them hard to explore different methods – don’t settle for OLS. And strive to be Bayesian: don’t let go of business intuition: remember that AI is not Artificial Intelligence but Augmented Intelligence.

Next week, I want to take a step back from this mini-journey and discuss what this means to how an organization should think of Data Science – I believe the time has to come to grow out of the world of supervised regression models (we shall call it Analytics 1.0). And that starts with upgrading the Analytics talent and organization model.

Further reading:

Markov models are heavily researched now – lots of reading material. Good place to start and follow the links – it is Markovian 🙂 https://en.wikipedia.org/wiki/Markov_model https://en.wikipedia.org/wiki/Markov_chain_Monte_Carlo

The life and work of Markov. Posterior guess: he may have had an OCD streak in him – could be why he got after the conventional wisdom of the sanctity of independence condition of events. http://www.meyn.ece.ufl.edu/archive/spm_files/Markov-Work-and-life.pdf

A tangent on Simulations. This was a problem waiting to be solved – expect the economists to turn up their noses at it https://www.technologyreview.com/2020/05/05/1001142/ai-reinforcement-learning-simulate-economy-fairer-tax-policy-income-inequality-recession-pandemic/

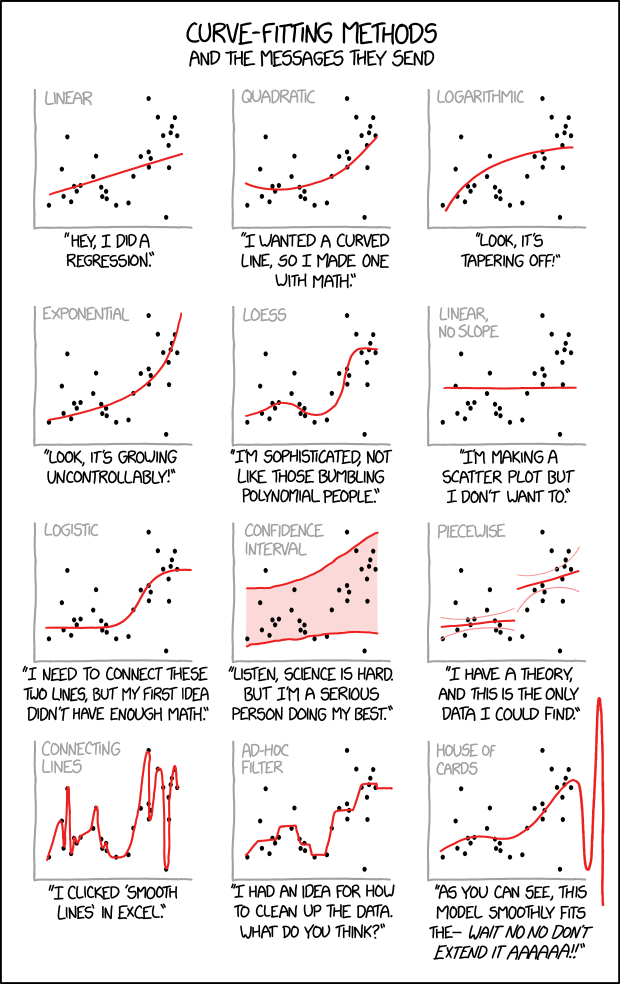

And to sign-off on this series, here is one of my favorite cartoons from one of my favorite sites (xkcd.com). Pretty much summarizes where the current data science capability will end up if they don’t learn what it will take to navigate the new world ..